//16.2 History

From Ancient Greece to ChatGPT: A Brief History of AI

We have all heard of AI, but when and how did the concept first start and become a reality?

Conceptualisation

- Around 700 BC, the Greek poet Hesiod mentioned a giant bronze man, widely believed to be Talos, a metal guardian built by the Greek god Hephaestus. This is one of the earliest concepts of a robot. Some scholars believe that Pandora was originally described as an artificial, evil woman built by Hephaestus.

A Greek vase painting, dating to about 450 B.C., depicts the death of Talos. (Wikimedia Commons/Forzaruvo94)

- Around 1495, Leonardo da Vinci designed and possibly constructed a mechanical knight operated by pulleys and cables. The robot could stand, sit, raise its visor, and independently manoeuvre its arms, and it had a fully functional jaw.

- In 1763, two years after his death, English mathematician Thomas Bayes’s essay on probability and binomial parameters was published by the Royal Society. About 200 years later, his ideas became the basis for Bayesian inference, and Bayes’ theorem became a leading concept in machine learning.

- In 1818, English novelist Mary Shelley published Frankenstein; or, The Modern Prometheus. The tale of the man-made creature built by Victor Frankenstein is regarded as one of the earliest science fiction novels and brought AI-like concepts into pop culture.

- In 1843, English mathematician Ada Lovelace wrote seven pages of notes that are often regarded as the world’s first modern computer programme. The notes concerned the Analytical Engine, a mechanical general-purpose computer proposed by Charles Babbage, and she was the first to recognise that the machine had applications beyond pure calculation.

- In 1912, Spanish engineer Leonardo Torres Quevedo built his first chess-playing machine, El Ajedrecista, and presented it at the University of Paris in 1914. The device is sometimes considered the first computer game in history.

- In 1921, Czech playwright Karel Čapek premiered the science fiction play “R.U.R.,” or “Rossum’s Universal Robots,” which introduced the idea of “artificial people” named “robots.” This was the first known use of the word.

Foundation (1943-1950)

- In 1943, American neurophysiologist Warren McCulloch and logician Walter Pitts proposed the first mathematical model of an artificial neuron, laying the foundations for the study of neural networks and deep learning. This binary neuron model represents simple logical operations through binary inputs and outputs (0 or 1).

- In 1946, the first general-purpose electronic digital computer, “ENIAC” (Electronic Numerical Integrator and Computer), was unveiled at the University of Pennsylvania.
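The binary neuron model of McCulloch and Pitts mentioned above can be illustrated with a short sketch. This is a simplified reading, not their original formulation: it assumes excitatory inputs only and leaves out inhibitory inputs, and the names `mcp_neuron`, `logical_and` and `logical_or` are hypothetical, chosen for this example.

```python
def mcp_neuron(inputs, threshold):
    """Simplified threshold unit: output 1 if enough binary inputs are active.

    Assumption: excitatory inputs only; inhibitory inputs are not modelled.
    """
    if any(x not in (0, 1) for x in inputs):
        raise ValueError("inputs must be 0 or 1")
    return 1 if sum(inputs) >= threshold else 0


def logical_and(a, b):
    # Both inputs must be active for the unit to fire.
    return mcp_neuron([a, b], threshold=2)


def logical_or(a, b):
    # A single active input is enough for the unit to fire.
    return mcp_neuron([a, b], threshold=1)


if __name__ == "__main__":
    for a in (0, 1):
        for b in (0, 1):
            print(a, b, "AND:", logical_and(a, b), "OR:", logical_or(a, b))
```

Changing only the threshold turns the same unit from an AND gate into an OR gate, which is the sense in which the model captures simple logical operations.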

Birth of AI (1950-1958)

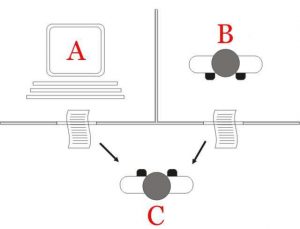

- In 1950, British mathematician Alan Turing, inspired by Ada Lovelace, published “Computing Machinery and Intelligence” and proposed a test of machine intelligence called the Imitation Game, now widely known as the Turing Test. The paper poses the simple question, “Can machines think?”, which has become the central, long-term goal of AI research. In the same year, Claude Shannon built Theseus, a remote-controlled mouse that was able to find its way out of a labyrinth and could remember its course.

- In 1955, American mathematician and computer scientist John McCarthy first coined the term “artificial intelligence” to describe the science and engineering of making machines intelligent.

- In 1958, Frank Rosenblatt developed the perceptron, an early artificial neural network (ANN) that could learn from data, laying the foundation for modern neural networks. In the same year, John McCarthy created LISP (short for List Processing), the first programming language for AI research, which is still in use today.
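To give a sense of what “learning from data” means for a perceptron, here is a minimal sketch of the classic perceptron learning rule. It is an illustration under stated assumptions, not a reconstruction of Rosenblatt’s original machine: the function names, the learning rate, the number of epochs and the toy OR dataset are all hypothetical choices made for this example.

```python
def predict(weights, bias, x):
    """Return 1 if the weighted sum of inputs plus bias is positive, else 0."""
    activation = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if activation > 0 else 0


def train_perceptron(samples, epochs=20, learning_rate=1.0):
    """Fit weights with the perceptron rule: nudge them after each mistake."""
    n_inputs = len(samples[0][0])
    weights = [0.0] * n_inputs
    bias = 0.0
    for _ in range(epochs):
        for x, target in samples:
            error = target - predict(weights, bias, x)  # -1, 0, or +1
            weights = [w + learning_rate * error * xi
                       for w, xi in zip(weights, x)]
            bias += learning_rate * error
    return weights, bias


if __name__ == "__main__":
    # Toy dataset (assumed for illustration): the logical OR function.
    or_samples = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 1)]
    weights, bias = train_perceptron(or_samples)
    for x, target in or_samples:
        print(x, "->", predict(weights, bias, x), "(expected", target, ")")
```

A single perceptron can only learn patterns that a straight line can separate. OR is one such pattern, which is why it is used here.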

Maturation (1959-1974)

- In 1959, Arthur Samuel coined the term “machine learning” while describing his work on teaching machines to play checkers better than the humans who programmed them.

- In 1966, MIT professor Joseph Weizenbaum created ELIZA, the first “chatterbot” (later shortened to chatbot), a mock psychotherapist that used natural language processing (NLP) to communicate with humans.

- In 1968, the famous science fiction film 2001: A Space Odyssey, directed by American filmmaker Stanley Kubrick, was released. The film, which explores the implications of AI, is believed to be one of the most controversial and influential of all sci-fi films.

- In 1969, Marvin Minsky and Seymour Papert published the book Perceptrons: An Introduction to Computational Geometry, providing the first systematic study of parallelism in computation and marking a historical turn in AI.

First AI Winter (1974-1980)

- In 1979, the American Association for Artificial Intelligence, now known as the Association for the Advancement of Artificial Intelligence (AAAI), was founded.

- In 1980, the first national conference of the AAAI was held at Stanford University.

Booming Period (1980-1987)

- In 1981, the Japanese government allocated $850 million to the Fifth Generation Computer project, intending to create computers that could translate, converse in human language, and express reasoning on a human level.

- In 1984, at a meeting of the AAAI, Marvin Minsky and Roger Schank warned of a coming “AI winter,” a period in which funding and interest would decrease and make research significantly more difficult.

- In 1985, British-born artist Harold Cohen demonstrated an autonomous drawing program known as AARON at the AAAI conference. This work took Cohen 20 years to develop and belongs to the first generation of computer-generated art.

- In 1986, Ernst Dickmanns and his team at Bundeswehr University Munich created and demonstrated the first driverless car. It could drive at up to 55 mph on roads that didn’t have other obstacles or human drivers.

Second AI Winter (1987-2000)

- In 1988, computer programmer Rollo Carpenter invented the chatbot Jabberwacky, which he programmed to provide interesting and entertaining conversations to humans.

- In 1997, Deep Blue (developed by IBM) beat the world chess champion, Garry Kasparov, in a highly publicised match, becoming the first programme to beat a reigning world chess champion in a match. In the same year, Dragon Systems released speech recognition software for Windows.

Revival and Emergence of Machine Learning (2000-2010)

- In 2000, Professor Cynthia Breazeal developed the first robot that could simulate human emotions with its face, which included eyes, eyebrows, ears, and a mouth. It was called Kismet.

- In 2003, NASA launched two rovers to Mars (Spirit and Opportunity). After landing in early 2004, they navigated the surface of the planet without human intervention.

- In 2006, Geoffrey Hinton published a paper on deep learning, laying the foundation for the recent AI revolution. Around the same time, AI entered the business world as companies such as Twitter, Facebook, and Netflix started using AI as part of their advertising and user experience (UX) algorithms.

- In 2009, Rajat Raina, Anand Madhavan and Andrew Ng published “Large-Scale Deep Unsupervised Learning Using Graphics Processors,” presenting the idea of using GPUs to train large neural networks.

- In 2010, Microsoft launched the Xbox 360 Kinect, the first gaming hardware designed to track body movement and translate it into gaming directions.

New Bloom (2011- )

- In 2011, Watson, a question-answering NLP system created by IBM, won Jeopardy! against two former champions in a televised game. In the same year, Apple released Siri, bringing AI-powered virtual assistants into the mainstream.

- In 2012, Google’s AI system demonstrated the ability to recognise cats in YouTube videos.

- In 2014, the chatbot Eugene Goostman was reported to have passed the Turing Test by convincing a third of the judges participating in the experiment that it was a human being. In the same year, Ian Goodfellow and his colleagues introduced Generative Adversarial Networks (GANs), which enable AI systems to generate highly realistic images, videos, and other media.

- In 2016, nearly 20 years after the Kasparov chess match with Deep Blue, DeepMind’s AlphaGo programme, based on a deep neural network, beat Lee Sedol, the world Go champion, four games to one in a five-game match.

- In 2018, the Turing Award, or the “Nobel Prize of Computing,” was awarded to Yoshua Bengio, Geoffrey Hinton, and Yann LeCun, whose work kicked off the world of deep learning we see today. The trio of researchers are often referred to as the “Godfathers of Deep Learning.”

- Also in 2018, OpenAI released GPT (Generative Pre-trained Transformer), paving the way for subsequent LLMs.

- In early 2020, Microsoft announced Turing Natural Language Generation (T-NLG), a generative language model with 17 billion parameters.

- In 2020, OpenAI began beta testing GPT-3, a large language model with 175 billion parameters that uses deep learning to generate human-like text, including code, poetry, and other writing. While not the first of its kind, it was the first to create content almost indistinguishable from that created by humans.

- In 2021, OpenAI developed DALL-E, which can generate images from text descriptions, moving AI one step closer to understanding the visual world.

- In 2022, OpenAI released ChatGPT in November to provide a chat-based interface to its GPT-3.5 LLM.

- In 2023, OpenAI announced GPT-4, a multimodal LLM that accepts both text and image prompts.

Terms that you should know:

NLP (Natural Language Processing) is a field of AI that deals with enabling computers to understand, process, and generate human language data.

LLMs (Large Language Models) are neural network models trained on massive text datasets to generate human-like language outputs for various natural language tasks.

GANs (Generative Adversarial Networks) are a type of deep learning architecture in which two neural networks, a generator and a discriminator, compete against each other in a game-theoretic manner.

GPT (Generative Pre-trained Transformer) refers to a family of large language models developed by OpenAI, with GPT-3 and ChatGPT being the most well-known examples that can generate human-like text based on prompts.